Quality of insurance information data

Our client is a major financial institution with a presence mainly in Europe and America. It is among the three largest insurers in the Mexican market and a leader in the regions where it operates. In recent years it has undertaken a digital transformation, creating a state-of-the-art technology platform.

We at Bluetab Mexico are one of the group’s main partners. We collaborated with them by carrying out a thorough analysis to identify data quality problems in the IT assets of their Insurance arm, re-engineering some processes from the ground up to ensure information quality, and developing and automating processes that structure day-to-day information so that it meets the standards set for our client’s Big Data platform.

The aim of this project is to give our client mechanisms to exploit one of their competitive advantages: the information they hold about their customers in one of the largest portfolios in the country. Transforming that information into knowledge lets them offer those customers a better experience through a personalised offering.

An additional benefit is reliable, available, well-ordered information of adequate quality to support their regulatory and decision-making processes.

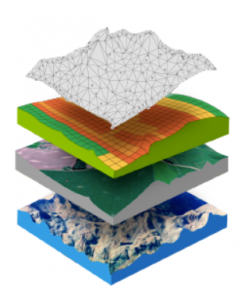

Another fundamental element is open data platforms, which range from meteorological, satellite or geological data to historical data for specific geographical areas. Crossing these with your own data enables anything from improved prediction of weather phenomena and their impact on crop ripening to forecasts of future crop volume and its market value.

Finally, a differentiating element is the self-driving vehicles from companies such as John Deere, whose tractors use the same artificial intelligence models as self-driving cars as sophisticated as Alphabet’s Waymo. Image recognition models enable positioning and measurement actions that reduce herbicide or fertiliser applications by 70 to 90%. It should be noted that approximately 50% of fertilisers are lost to the environment under normal conditions.

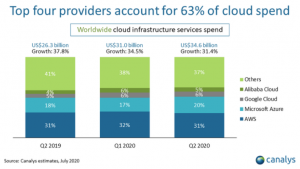

In general terms, the AWS platform currently leads its competitors in market position, although its position has dropped slightly in the last year. This means that, in markets such as Spain and Mexico, our perception is that the number of resources available is also greater.